Trip report: 'CWI Lectures on Secure Computation' (Amsterdam, NL)

Yesterday we attended the afternoon part of the CWI Lectures on Secure Computation, organised by Prof. Marten van Dijk. The programme included keynotes by three great external speakers: Srini Devadas (MIT) on PAC Privacy, Ingrid Verbauwhede (KU Leuven and Belfort) on the difficulty of securely implementing cryptographic primitives in hardware, and Shweta Shinde (ETH Zurich) on developments around trusted execution environments (TEEs).

PAC Privacy

In the first talk of the afternoon, Srini Devadas presented his work on “PAC Privacy”. PAC Privacy (PAC stands for “Probably Approximately Correct” and originates from Leslie Valiant’s PAC learning theory) is a framework (paper) for measuring and controlling how much information the output of a function leaks about its inputs.

For example, suppose you want to compute the average salary of a group of people. Although the result is only an average, it still reveals some information about the individual inputs. If the average of three salaries is 3000 euro, for instance, we know that none of the input salaries exceeds 9000 euro (assuming salaries are non-negative). Worse, if we compute the average salary of a set of people and, subsequently, the average salary of the same set minus one specific person, we can derive that person's exact salary (a so-called “differencing attack”).
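The differencing attack is plain arithmetic once the attacker knows the sizes of both sets, which we assume here: an average times the set size gives the total, and the two totals differ by exactly one salary. The helper names, people and salaries below are made up for illustration.

```python
def mean(values):
    return sum(values) / len(values)


def recover_excluded(avg_all, n_all, avg_rest):
    """Hypothetical helper: the two released averages differ by exactly one person."""
    # Assumes the attacker knows n_all and that the second set has n_all - 1 members.
    # Totals follow from averages and set sizes: total = average * size.
    return avg_all * n_all - avg_rest * (n_all - 1)


salaries = {"alice": 2500, "bob": 3000, "carol": 3500, "dave": 4000}
avg_all = mean(list(salaries.values()))
avg_rest = mean([s for name, s in salaries.items() if name != "dave"])
print(recover_excluded(avg_all, len(salaries), avg_rest))  # 4000.0
```

Neither released number looks sensitive on its own; it is their combination that pins down Dave's salary exactly.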

The PAC Privacy framework provides an elegant way of adding noise to a function output, such as the average salary, in order to guarantee the privacy of individual inputs. The core idea is to compute the function, say, 100 times, each time on a different randomly selected half of the dataset. The variance of these 100 outputs indicates how sensitive the output is to the particular inputs it is computed on. One of the 100 outputs is then selected, and noise calibrated to this variance is added: the higher the variance, the more noise. Interestingly, it can be shown that this simple technique satisfies a mathematically well-defined, information-theoretic notion of privacy. Moreover, the technique can be applied to any function in an automated way, without the function-specific mathematical analysis that is required by, e.g., differential privacy, an alternative (and better-known) framework for output privacy.
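A minimal sketch of the steps above for a one-dimensional output such as an average salary. It is our own illustration, not Devadas's implementation: the helper names `pac_release` and `pac_noise_std` and the privacy budget `mi_budget` (in nats) are hypothetical, and we calibrate Gaussian noise with the textbook Gaussian-channel bound I ≤ ½ ln(1 + Var/σ²), solved for σ. The actual framework also handles multi-dimensional outputs, where the noise follows the output covariance.

```python
import math
import random
import statistics


def pac_noise_std(outputs, mi_budget):
    """Gaussian noise std for a 1-D output, calibrated to the spread of `outputs`.

    Assumes the bound I <= 0.5 * ln(1 + var / sigma**2) (in nats) and solves it
    for sigma at equality with `mi_budget`, which must be positive.
    """
    var = statistics.pvariance(outputs)
    return math.sqrt(var / math.expm1(2 * mi_budget))


def pac_release(data, f, trials=100, mi_budget=0.5, rng=None):
    """Hypothetical helper: release f on the data with PAC-style calibrated noise."""
    if rng is None:
        rng = random.Random()
    half = len(data) // 2
    # Evaluate f on `trials` randomly selected halves of the dataset.
    outputs = [f(rng.sample(data, half)) for _ in range(trials)]
    # Higher variance across halves -> output is more input-sensitive -> more noise.
    sigma = pac_noise_std(outputs, mi_budget)
    # Select one of the outputs and perturb it with Gaussian noise.
    chosen = rng.choice(outputs)
    return chosen + rng.gauss(0.0, sigma), sigma


salaries = [2800, 3100, 2950, 4200, 3600, 2500, 5100, 3300, 2700, 3900]
value, sigma = pac_release(salaries, statistics.fmean, rng=random.Random(42))
print(f"noisy average: {value:.0f} euro (noise std {sigma:.0f})")
```

Note that nothing in `pac_release` depends on what `f` computes: the function is treated as a black box, which is what makes the approach automatable. A smaller `mi_budget` means less permitted leakage and therefore a larger noise standard deviation.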

Interestingly for us, multi-party computation and PAC Privacy are complementary: multi-party computation protects the inputs while a function output (such as an average salary) is being computed, whereas PAC Privacy controls how much the computed output itself reveals. PAC Privacy can therefore be an alternative to techniques such as redacting outputs or using differential privacy. Srini Devadas gave a very hands-on talk that certainly inspired us to try out these techniques and see whether we can add them to our disclosure risk control toolbox!
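To make the complementarity concrete, here is a minimal sketch using additive secret sharing, one basic building block of multi-party computation (our choice for illustration; real MPC protocols add much more, e.g. protection against misbehaving parties). The helper names and the modulus `P` are hypothetical. The parties learn the total salary, but no party ever sees an individual salary.

```python
import secrets

P = 2**61 - 1  # prime modulus for the shares (illustrative choice)


def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n additive shares mod P; any n-1 of them look uniformly random."""
    shares = [secrets.randbelow(P) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % P)
    return shares


def mpc_sum(inputs: list[int]) -> int:
    n = len(inputs)
    # Each input owner splits their value and sends share j to party j.
    all_shares = [share(v, n) for v in inputs]
    # Party j adds up the shares it received; it never sees a full input.
    partial_sums = [sum(s[j] for s in all_shares) % P for j in range(n)]
    # Combining the partial sums reveals only the total.
    return sum(partial_sums) % P


salaries = [2800, 3100, 3600]
average = mpc_sum(salaries) / len(salaries)
print(average)
```

The revealed average, however, is exactly the kind of output that the bounds and differencing attacks above exploit; MPC does nothing to limit that, which is where an output-privacy technique such as PAC Privacy would come in.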

Current trends in TEEs: more performance, less security

Shweta Shinde talked about trusted execution environments (TEEs), a topic on which the organiser, Marten van Dijk, did foundational research in the early 2000s, including work together with Srini Devadas.

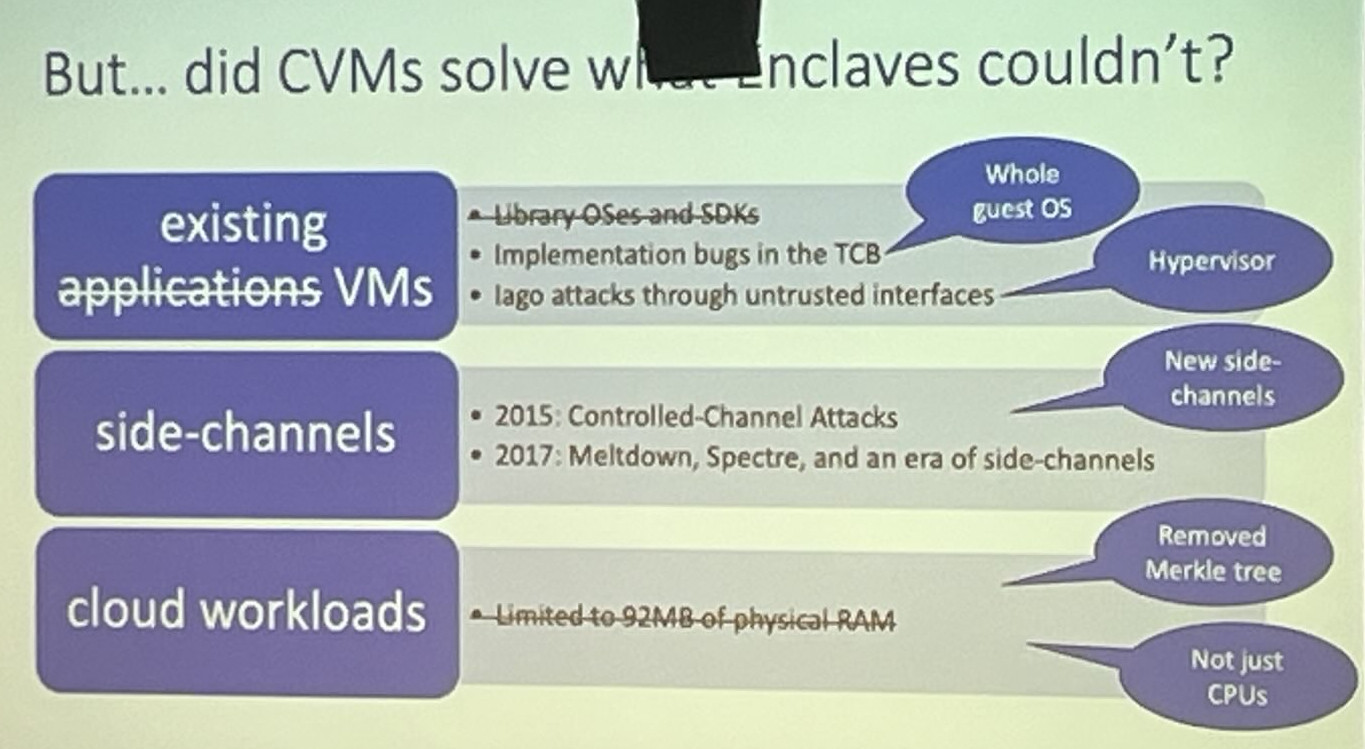

She sketched the timeline of commercial TEEs: first-generation TEEs such as AMD PSP / Arm TrustZone (2013), second-generation enclave-based designs such as Intel SGX (2015), and the current third generation of confidential-virtual-machine-based designs such as AMD SEV-SNP, Intel TDX (2021) and Arm CCA (2021).

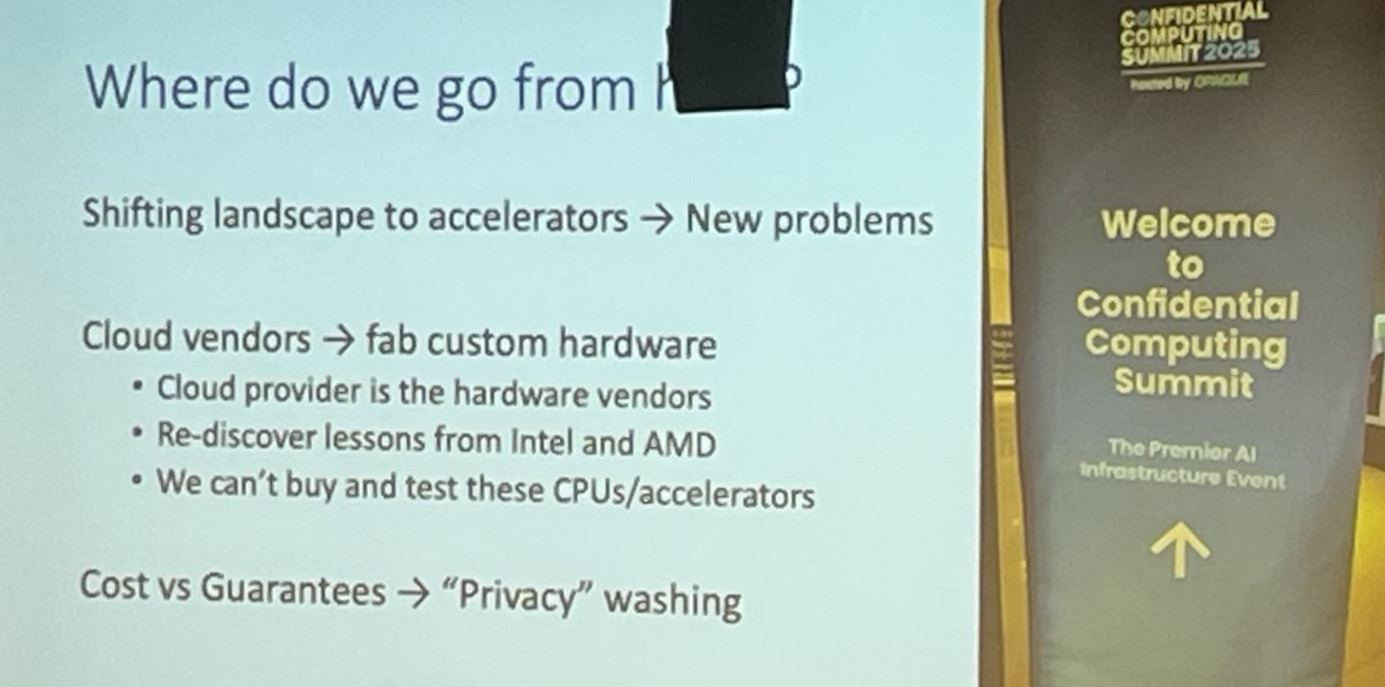

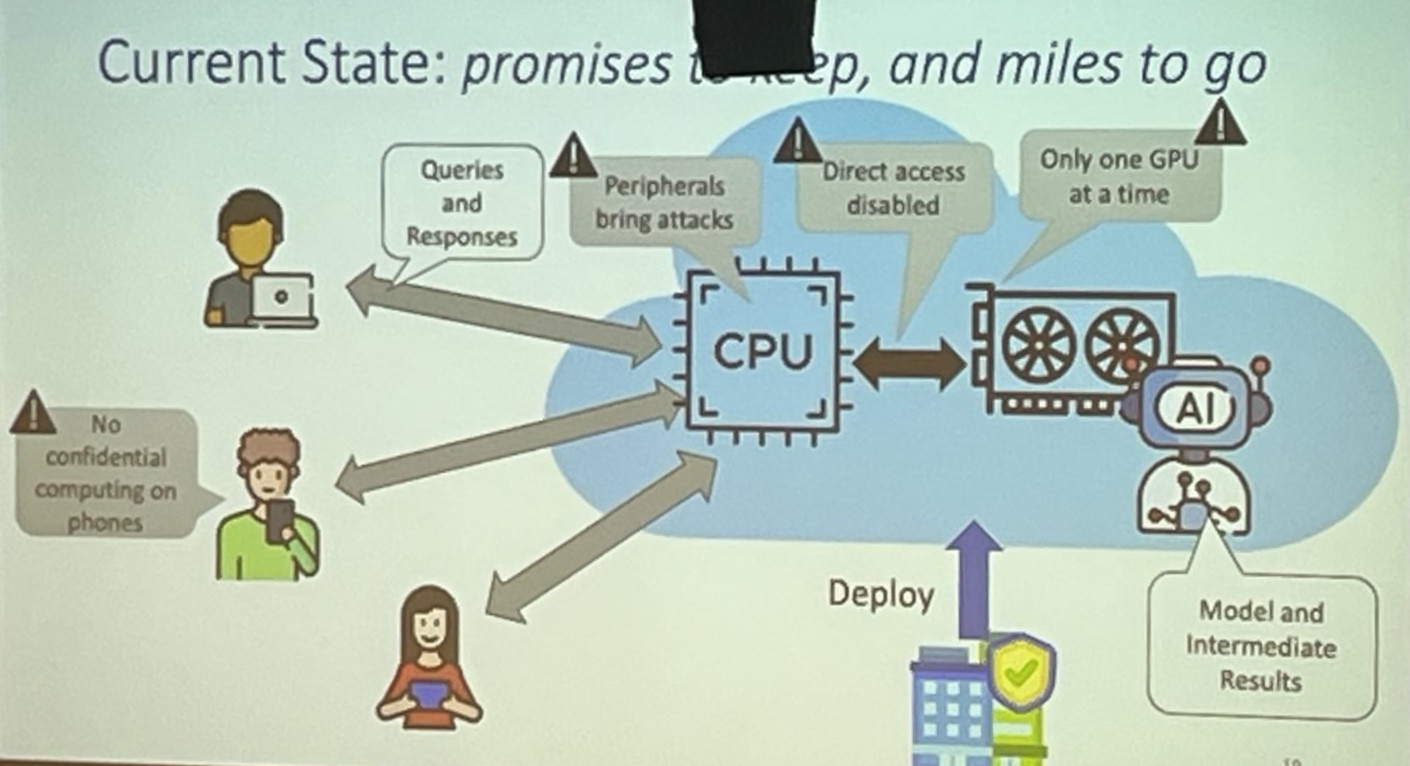

Shinde argued that the current focus is on easier integration into the existing software stack (containers/VMs), which significantly enlarges the attack surface, and on extending the TEE to hardware peripherals such as GPUs. Moreover, TEE designs are in fact being weakened to improve performance, e.g. by dropping the Merkle tree used for memory integrity protection.
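A toy sketch of what a Merkle tree buys for memory integrity: only the root hash has to be kept in trusted on-chip storage, yet any modification of off-chip memory, including replaying a stale but once-valid block, changes the recomputed root. The class and helper names are hypothetical, and real hardware integrity trees differ considerably (they typically use counters and MACs, and update only the path from the written block to the root instead of rehashing everything).

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(blocks: list[bytes]) -> bytes:
    """Hash the memory blocks pairwise up to a single root hash."""
    level = [h(b) for b in blocks]
    while len(level) > 1:
        if len(level) % 2:  # odd number of nodes: duplicate the last one
            level.append(level[-1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


class IntegrityProtectedMemory:
    """Toy model: blocks live in untrusted DRAM, only the root stays on-chip."""

    def __init__(self, blocks: list[bytes]):
        self.dram = list(blocks)            # attacker can read and modify this
        self.root = merkle_root(self.dram)  # trusted, kept inside the CPU

    def write(self, idx: int, data: bytes) -> None:
        self.dram[idx] = data
        # A real tree updates only the leaf-to-root path; we simply rehash.
        self.root = merkle_root(self.dram)

    def read(self, idx: int) -> bytes:
        if merkle_root(self.dram) != self.root:
            raise ValueError("memory integrity violation")
        return self.dram[idx]


mem = IntegrityProtectedMemory([bytes([i]) * 64 for i in range(8)])
stale = mem.dram[3]
mem.write(3, b"\xff" * 64)
mem.dram[3] = stale  # attacker replays an old (but once valid) block
try:
    mem.read(3)
except ValueError as err:
    print(err)  # memory integrity violation
```

Even in hardware, every protected access means extra work along the tree path, which is the kind of overhead that motivates dropping the tree at the cost of weaker guarantees.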

Another interesting point raised by Shinde is that cloud vendors used to buy commercial off-the-shelf TEE processors (from, say, Intel or AMD), which allowed third parties to verify those processors independently. Nowadays, cloud vendors design their own silicon (e.g., Azure Cobalt, AWS Nitro), meaning that researchers can no longer independently verify these processors.